Solving Citation Networks with Large Language Models

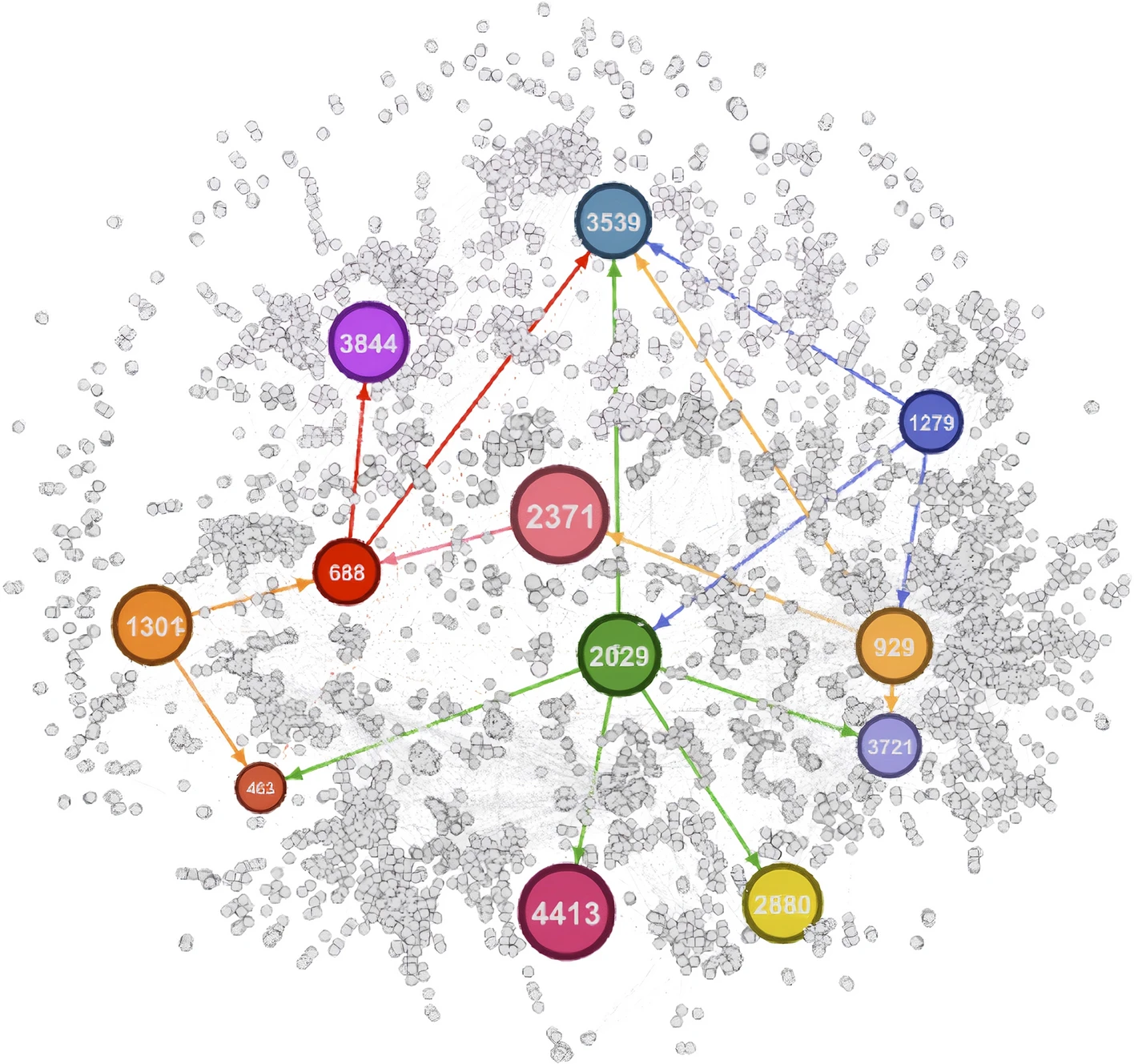

The three citation networks Cora, CiteSeer, and PubMed are fundamental, well-known datasets in graph machine learning. In each of these datasets, nodes represent papers, and two papers are connected if one cites the other. The machine learning task is to predict the research area of a paper from the citation structure and a set of keywords extracted from the paper's abstract.

In this project, we focus on the keywords attached to every node and completely ignore the citation structure. The idea is that modern large language models such as ChatGPT should be able to extract enough information from these abstract-derived keywords alone to correctly predict the area of the paper.

On the technical side, we would like to build on Meta’s Llama 3 or on derived models trained by the community.
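
The keyword-only idea can be illustrated with a minimal, model-agnostic sketch: turn a node's keywords into a zero-shot prompt, then map the model's free-text reply back to a class label. Everything here is an assumption made for illustration: the helper names `build_prompt` and `parse_area` are hypothetical, the prompt wording is arbitrary, and the label list is the seven areas commonly listed for Cora. The call to the language model itself is deliberately left out, since the concrete model and inference library are not fixed yet.

```python
from typing import Optional, Sequence

# Assumed label set: the seven research areas commonly listed for Cora.
CORA_AREAS = [
    "Case Based",
    "Genetic Algorithms",
    "Neural Networks",
    "Probabilistic Methods",
    "Reinforcement Learning",
    "Rule Learning",
    "Theory",
]


def build_prompt(keywords: Sequence[str], areas: Sequence[str]) -> str:
    """Turn a node's keywords into a zero-shot classification prompt."""
    options = "\n".join(f"- {area}" for area in areas)
    return (
        "A scientific paper is described by these keywords: "
        + ", ".join(keywords)
        + ".\nWhich of the following research areas does it belong to?\n"
        + options
        + "\nAnswer with exactly one area name."
    )


def parse_area(reply: str, areas: Sequence[str]) -> Optional[str]:
    """Return the area that appears earliest in the reply, or None."""
    lowered = reply.lower()
    # Record the position of every area name that occurs in the reply.
    hits = [(lowered.find(a.lower()), a) for a in areas if a.lower() in lowered]
    return min(hits)[1] if hits else None
```

In such a setup, the string returned by `build_prompt` would be sent to the chosen model, and `parse_area` would be applied to its reply; replies that name no known area come back as `None` and can be counted as errors during evaluation.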

Requirements

- Deep learning fundamentals