Developing neural networks using local learning rules based on Partial Information Decomposition

Background

This is a thesis project in collaboration with Viola Priesemann’s lab at the Max Planck Institute for Dynamics and Self-Organization.

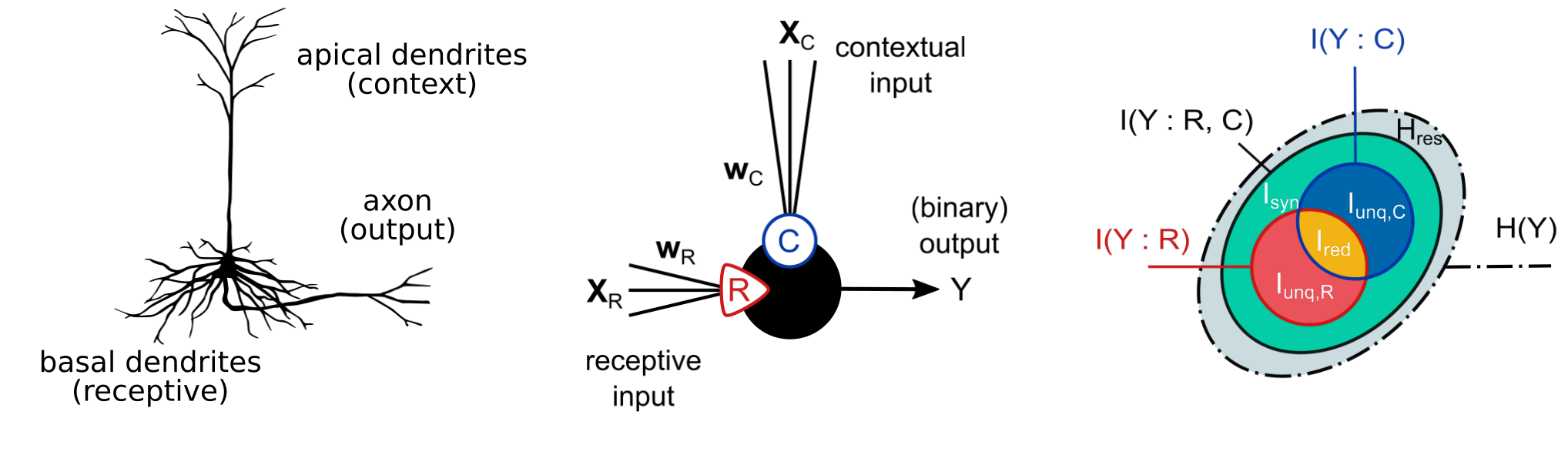

Artificial and living neural networks rely on comparatively simple computational units; however, the role of neuron-level computations within the network remains unclear. To identify and validate these principles, we have developed biologically inspired “infomorphic” networks that learn using interpretable, information-based neuron-level objective functions. So far, we have demonstrated that these networks can solve tasks from various learning paradigms [1], match the performance of end-to-end backpropagation [2], and, in associative memory networks, match state-of-the-art learning rules [3], all while providing insights into the local principles of learning and computation.

Master’s thesis

For the next phase of this successful line of research, we are looking for students interested in expanding our work, for instance by:

- investigating correlation-based alternatives to information-theoretic neural goals

- developing feedback-driven learning for deep networks

- building networks for multimodal feature learning (for example representing redundancies between image and audio sequences)

These projects are ideal for students with a background in data science or computer science (or a related field, such as physics) who enjoy working with machine learning models and want to understand how and why they work, and how they relate to biological neural networks.

Requirements

- Familiarity with Python for machine learning (PyTorch)

- Basic understanding of and interest in probability theory and/or information theory

Literature

[1] Makkeh, A., Graetz, M., Schneider, A. C., Ehrlich, D. A., Priesemann, V., & Wibral, M. (2025). A general framework for interpretable neural learning based on local information-theoretic goal functions. Proceedings of the National Academy of Sciences, 122(10), e2408125122. https://doi.org/10.1073/pnas.2408125122

[2] Schneider, A. C., Neuhaus, V., Ehrlich, D. A., Makkeh, A., Ecker, A. S., Priesemann, V., & Wibral, M. (2025). What should a neuron aim for? Designing local objective functions based on information theory [Oral presentation]. Thirteenth International Conference on Learning Representations (ICLR 2025), Singapore. https://doi.org/10.48550/arXiv.2412.02482

[3] Blümel, M., Schneider, A. C., Neuhaus, V., Ehrlich, D. A., Graetz, M., Wibral, M., Makkeh, A., & Priesemann, V. (2025). Redundancy maximization as a principle of associative memory learning. arXiv. https://doi.org/10.48550/arXiv.2511.02584